"When will the project be done?" or "We need this project to be finished by (this deadline). Can you do this?" are typical questions which senior managers expect project managers to be able to answer. These questions will not go away in an agile environment, because other initiatives may depend on the project. When there is no project manager, the team (and, in a Scrum context, the Product Owner) should still be able to provide an answer.

Bad answers

"We're Agile. We don't do Projects any more."

Ha ha ha. Very funny. There will still be projects, although they will be within a different context. Instead of pulling together random groups of people to deliver a prepackaged bundle of work, we rely on dedicated teams with a clearly delineated responsibility for products, components or features. These agile teams will take those work packages called "projects" and deliver them.

A "Project", in an agile context, is nothing more than a label attached to a number of backlog items.

The project begins when we pull the first backlog item and ends when we complete the last.

If you like, you might put the entire project into a single, pretty big backlog item, something many teams call an "Epic", and the result is often referred to as a "(Major) Release".

"We're Agile. We don't know"

What could be a better way to rub a manager (and/or customer) the wrong way? Imagine you were a developer ordering a new server, and you asked the admins and/or web host "When can we have this server?" or "Can we have this server by next Friday?" If they replied "We don't know", would you consider that a satisfactory answer? If not, you can probably empathize with a sales manager or customer who isn't satisfied with this answer, either.

While there is definitely some truth to the idea that there are things we don't know, there are also things that we do know, and we are well able to give an answer based on the best information we have today.

And here is how you can do that:

A satisfactory answer

Based on the size of your project in the backlog and your velocity, we can make a forecast.

To keep things simple, we will use the term "Velocity" in a very generic way to mean "Work Done within an Iteration", without reference to any specific estimation method. We will also use "Iteration" to mean "A specific amount of time", regardless of whether that amount is a week, a calendar month or a Scrum Sprint. Even outside a Scrum context, we can still figure out how many days elapse over a fixed amount of time.

If we have an existing agile team, we should have completed some work already. As soon as we have delivered something, we can make forecasts, which we shall focus on in this article.

Disclaimer: This article does NOT address sophisticated estimation methods, statistical models for forecasting and/or progress reporting. It discusses principles only. The level of sophistication can easily be increased where this is desired, needed and helpful. Information on these topics can be found on Google by searching for "Monte Carlo Method", "Agile Roadmap Planning" and "Agile Reporting".

For those interested in a mathematically more in-depth follow-up, please take a look at this post by William Davis.

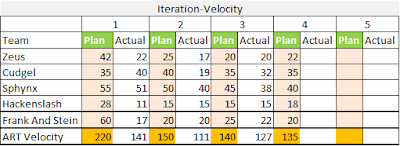

We need some data

Maybe we haven't delivered anything yet, and then it's all guesswork.

In this case, the best thing we can do is spend a few weeks getting things done. Let us collect data first, so that we have something to build our forecast upon.

What would be the alternative? Any forecast not based on factual evidence is pure guesswork.

The more time we spend without delivering value, the later our project will be.

We can make a forecast

We can use our historic data to make various types of forecasts.

The most relevant management forecasts are the date and scope forecasts, which also allow us to make a risk forecast. We will first examine the date forecast.

Let's take an example project:

- Our project requires 200 points.

- Our Velocity over the last 5 Sprints has been 8, 6, 20, 7, 9.

Management Questions:

- Can it be delivered in 22 Iterations? (Due Date Status)

- When will it be delivered? (ETA)

- What will be delivered in 22 Iterations? (Project Scope)

Let's look at a schematic diagram based on Cumulative Flow, and then examine the individual forecasting lines in more detail below:

Fig. 1: A schematic overview of how we can forecast dates and risks for Agile Projects

Averaged Completion

Probably the most common way to make a forecast is to average the velocity over the last few intervals and build the forecast from there. The good thing about this "average forecast" is that it's so simple.

Our example gives us an Average Velocity of 10.

Our forecast would be:

- Due Date Status: Green.

- ETA: 20 Iterations.

- Project Scope: 100%

The consequence will be that the team will be given 20 Iterations for completion.
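The average forecast above can be reproduced with a few lines of arithmetic. This is a minimal sketch, assuming velocity stays constant at the observed average and that a partially used iteration counts as a full one (hence rounding the ETA up); the function name `forecast` is hypothetical and not part of any tool.

```python
import math

def forecast(velocity, total_points, due_iterations):
    """Return (eta_iterations, scope_percent_on_due_date) for a constant velocity."""
    # Round up: a partially used iteration still has to be worked through.
    eta = math.ceil(total_points / velocity)
    # Share of the total scope finished by the due date, capped at 100%.
    scope = min(100.0, 100 * velocity * due_iterations / total_points)
    return eta, scope

velocities = [8, 6, 20, 7, 9]  # example data from the article
average = sum(velocities) / len(velocities)
eta, scope = forecast(average, total_points=200, due_iterations=22)
print(average, eta, scope, "on time" if eta <= 22 else "late")
# 10.0 20 100.0 on time
```

The same `forecast` helper can be fed any of the velocity scenarios discussed below; only the velocity figure changes.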

The big issue with Average Completion is that any small change or unforeseen event will devastate the forecast.

How to use Average Completion

Average Completion can be calculated from historic data. The Average Date would be the earliest suggested ETA that you may want to communicate to the outside world. The Averaged Scope would be the corresponding committable scope on the Due Date - you have a 50% probability to make it!

How not to use Average Completion

The Average Completion Date will likely shift with every Iteration, and as soon as we fall behind plan, there's a significant risk that we won't catch up, because the future isn't guaranteed to become more favorable just because the past hasn't been favorable. As soon as our Velocity is lower than average, we're going to report Amber or Red and must reduce scope.

Management will have to expect this kind of bad news at every single Iteration Review.

This puts our team between a rock and a hard place, as it becomes known as the constant bringer of bad news, so you may prefer not to rely on the averaged completion date.

Minimal Completion

We can also take the best velocity achieved over a single Iteration and forecast the future from there. It gives us - at least - an earliest possible delivery date for the project. It's purely hypothetical, but it allows us to draw a boundary.

Our example would give us a Maximum Velocity of 20.

Our forecast would be:

- Due Date Status: Green.

- ETA: 10 Iterations.

- Project Scope: 100%

How to use Minimal Completion Dates

The "Minimal Completion Date" is a kind of reality check. If management expects the project to be delivered in 8 Iterations, and your Earliest Forecast already says 10, you don't even have the potential means to succeed.

Every date suggested before the Minimal Completion Date is wishful thinking, and you're well advised to communicate Status: Red as soon as this becomes visible.
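The reality check can be sketched as a simple comparison against the best-case ETA. This assumes every future iteration matches the best one observed so far; the function names and the "not ruled out" label are hypothetical. Passing the check only means the date is not impossible, not that it is Green.

```python
import math

def earliest_eta(velocities, total_points):
    """Best-case ETA: assume every future iteration matches the best one so far."""
    return math.ceil(total_points / max(velocities))

def reality_check(velocities, total_points, requested_iterations):
    """'Red' if the requested deadline is earlier than even the best-case ETA."""
    if requested_iterations < earliest_eta(velocities, total_points):
        return "Red"
    return "not ruled out"

velocities = [8, 6, 20, 7, 9]
print(earliest_eta(velocities, 200))       # 10
print(reality_check(velocities, 200, 8))   # Red
print(reality_check(velocities, 200, 22))  # not ruled out
```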

How not to use Minimal Completion Dates

Some organizations like best case forecasts and then make plans based on these.

I don't need to explain the madness of building a financial forecast on winning the lottery 20 times in a row - and planning on best cases is exactly this kind of madness.

You will fail if you plan for the earliest completion date.

Expected Completion

The best way to use our historic velocity is to remove statistical outliers. Unusually large amounts of completed work are normally caused by "spill-over", that is, work that had been started but not finished in earlier iterations and therefore wasn't really done within the iteration period. Alternatively, they might have been the result of work items being unusually fast to complete, and common sense dictates that we should not treat unusual events as usual. Therefore, we create a cleaned average that eliminates unusually high figures.

Our example would give us an Expected Velocity of 7.5.

Our forecast would be:

- Due Date Status: Amber.

- Earliest ETA: 27 Iterations.

- Project Scope: 80%

This means that we can either commit to delivering 80% of the scope by the Due Date, or move the Due Date by 5 Iterations to deliver 100% of the scope. This creates options and opens room for negotiation.
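The article does not say how "unusually high" is decided. The sketch below assumes Tukey's upper fence (Q3 + 1.5 × IQR) as the outlier rule and trims only the high side, since only high figures are eliminated above; `expected_velocity` is a hypothetical name. With the example data, 20 is dropped and the exact scope on the Due Date comes out at 82.5%, which the forecast above rounds to about 80%.

```python
import math
import statistics

def expected_velocity(velocities, k=1.5):
    """Average velocity after dropping unusually HIGH values.

    Assumption: "unusually high" means above Tukey's upper fence Q3 + k * IQR.
    Only the upper side is trimmed; low values are kept.
    """
    q1, _, q3 = statistics.quantiles(velocities, n=4, method="inclusive")
    upper_fence = q3 + k * (q3 - q1)
    kept = [v for v in velocities if v <= upper_fence]
    return sum(kept) / len(kept)

velocities = [8, 6, 20, 7, 9]
total_points, due = 200, 22
v = expected_velocity(velocities)                 # 20 lies above the fence of 12
eta = math.ceil(total_points / v)                 # iterations for the full scope
scope = min(100.0, 100 * v * due / total_points)  # % of scope on the due date
print(v, eta, scope, eta - due)
# 7.5 27 82.5 5
```

Any other outlier rule (for example a fixed multiple of the median) would work in the same place; the fence is just one common, explainable choice.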

How to use Expected Completion

The Expected Completion Date is what we can communicate to the outside world with a decent amount of confidence. Unforeseen events that are not too far out of the norm should not affect our plan.

While many stakeholders will try to haggle over the Expected Completion Date in order to get an earlier commitment, we have to state clearly that every calendar day earlier than forecasted increases the risk of not meeting expectations.

We can indeed reduce the project's scope in order to arrive at an earlier date, and if there is a hard deadline, we can also slice the project into two portions: "Committed until Due" and "May be delivered after Due".

The good news is that in most contexts, this will satisfy all stakeholders.

The bad news is that Part 2 will usually be descoped shortly after the Due Date, so any remaining technical debt spilled over from Part 1 is going to be a recipe for disaster.

How not to use Expected Completion

Some organizations treat the interval between the average and the expected completion date as the negotiation period, and if the due date falls between these dates, they call it a match.

I would rephrase this interval as the "period that will predictably be overrun".

Worst Case Completion

The absolute worst case is that no more gets finished than we have today - so the more we get done, the more value is guaranteed, but let's ignore this scenario for the moment.

It's realistic to assume that the future could be no better than the worst thing that happened in the past. We would therefore base the worst case completion on the minimum velocity in our observation period.

Our example would give us a Minimum Velocity of 6.

Our forecast would be:

- Due Date Status: Red.

- Earliest ETA: 34 Iterations.

- Project Scope: 66%
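The worst-case arithmetic is the same calculation with the minimum velocity. A minimal sketch, assuming constant velocity and rounding the ETA up to whole iterations: at 6 points per iteration, 22 iterations deliver 132 of 200 points, i.e. 66% of the scope on the Due Date.

```python
import math

velocities = [8, 6, 20, 7, 9]  # example data from the article
total_points, due = 200, 22

worst_velocity = min(velocities)                       # slowest observed iteration
worst_eta = math.ceil(total_points / worst_velocity)   # iterations for the full scope
worst_scope = min(100.0, 100 * worst_velocity * due / total_points)  # % on due date
print(worst_velocity, worst_eta, worst_scope)
# 6 34 66.0
```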

How to use Worst Case Completion

The Worst Case scenario is the risk that people have to take based on what we know today.

Especially in environments where teams tend to get interrupted with "other important work", receive changing priorities or suffer from technical debt, it may be wise to calculate a worst case scenario based on minimum velocity in the observation period.

Worst Case Completion normally results in shock and disbelief, which can be a trigger for wider systemic change: The easiest way to get away from worst case completion dates is by providing a sustainable team environment, clear focus and unchanging priorities.

How not to use Worst Case Completion

If you commit only to the Worst Case Date and Scope, you're playing it safe, but you're damaging your business. You will lose your team's credibility and trust within the organization and may even raise the question of whether the value generated by your team warrants its cost, so you risk your job.

Quantifying risks

You can derive the following figures from the dates and numbers you have:

- The expected completion date is when the project will likely be delivered as scoped.

- The predictable overrun is the duration by which the expected date moves past the due date.

- The predictable scope risk by the due date is the full scope minus the expected scope.

- The maximum project risk is the full scope minus the worst case scope.

- The maximum project delay is the duration by which the worst case date is beyond the due date.
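Applied to the running example, the list above turns into four numbers. This sketch takes the predictable overrun as the gap between the Expected Date and the Due Date (as in the Expected Completion example), uses the expected velocity of 7.5 and the minimum velocity of 6 from earlier, and measures dates in iterations; `scenario` and the dictionary keys are hypothetical names.

```python
import math

def scenario(velocity, total_points, due):
    """(eta in iterations, % of scope on the due date) for one velocity scenario."""
    eta = math.ceil(total_points / velocity)
    scope = min(100.0, 100 * velocity * due / total_points)
    return eta, scope

total_points, due = 200, 22
expected_eta, expected_scope = scenario(7.5, total_points, due)  # expected velocity
worst_eta, worst_scope = scenario(6, total_points, due)          # minimum velocity

risks = {
    "predictable overrun (iterations)": max(0, expected_eta - due),
    "predictable scope risk (%)": 100 - expected_scope,
    "maximum project risk (%)": 100 - worst_scope,
    "maximum project delay (iterations)": max(0, worst_eta - due),
}
for name, value in risks.items():
    print(f"{name}: {value}")
```

For the example this yields an overrun of 5 iterations, a scope risk of 17.5%, a maximum project risk of 34% and a maximum delay of 12 iterations.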

Managing risks

We can manage the different types of risks as follows:

- We increase confidence in our plan by working towards the Expected Date and Expected Scope.

- We reduce date overruns by adjusting our Due Date towards Expected Date.

- We reduce scope risks by adjusting project scope towards Expected Scope on Due Date.

- We reduce project cost by reducing project scope.

- We reduce project duration by reducing project scope.
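The two main levers above (move the Due Date, or cut scope) can be quantified for any velocity scenario. A minimal sketch, assuming constant velocity and dates measured in iterations; `mitigation_options` is a hypothetical name. For the expected velocity it reproduces the "move by 5 Iterations" option from the Expected Completion example, or alternatively descoping 35 points.

```python
import math

def mitigation_options(velocity, total_points, due):
    """Return (points to descope, iterations to move the due date) to get back to Green."""
    deliverable = velocity * due                                # points done by the due date
    descope = max(0.0, float(total_points - deliverable))       # option A: cut scope
    shift = max(0, math.ceil(total_points / velocity) - due)    # option B: move the date
    return descope, shift

print(mitigation_options(7.5, 200, 22))  # (35.0, 5)
print(mitigation_options(6, 200, 22))    # (68.0, 12)
```

Combinations in between (cut some scope, move the date a little) follow from the same numbers.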

Dealing with moving targets

Changes to the project are very easy to manage when we know our project backlog and velocity:

- The addition of backlog items:
  - moves our date forecast further to the right,
  - reduces the % scope on a fixed date,
  - increases scope risk and overrun risk.

- The removal of backlog items:
  - moves our date forecast further to the left,
  - increases the % scope on a fixed date,
  - decreases scope risk and overrun risk.
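To see these effects in numbers, the sketch below re-runs the forecast with 20 points added to and removed from the 200-point example, holding the expected velocity (7.5) and the due date (22 iterations) fixed. The ±20 point changes are made-up illustrations, not figures from the article.

```python
import math

def forecast(velocity, total_points, due):
    """(eta in iterations, % of scope on the due date) for a constant velocity."""
    eta = math.ceil(total_points / velocity)
    scope = min(100.0, 100 * velocity * due / total_points)
    return eta, scope

velocity, due = 7.5, 22  # expected velocity and due date from the example
for label, points in [("original", 200), ("+20 points", 220), ("-20 points", 180)]:
    eta, scope = forecast(velocity, points, due)
    print(f"{label}: ETA {eta} iterations, {scope:.1f}% on due date")
```

Adding 20 points moves the ETA from 27 to 30 iterations and drops the scope on the due date from 82.5% to 75%; removing 20 points pulls the ETA in to 24 iterations and raises the scope to about 91.7%.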

Agile Project Reporting

Based on the above information, you can communicate the current and forecasted project status in a simple matrix, such as this example:

| Descriptor | Date | Scope on Due Date | Status |
|---|---|---|---|
| Due Date | December 2020 | 100% | Amber |
| Change Period | June 2019 | +5% | Amber |
| Expected Date | March 2021 (+3 months) | 80% (-20%) | Green |
| Worst Case | December 2021 (+12 months) | 60% (-40%) | Green |
| Known Risks | 3 months overrun | 20-40% missing | Amber |

Fig. 2: An example status report for Agile Projects

This gives stakeholders high confidence that you know what you're doing and provides the aforementioned options of moving the project back to "Status: Green" by moving the Due Date, by reducing scope, or a combination thereof.

Since you have access to your backlog, you can even propose a number of feasible suggestions proactively, for example:

Option 1

1. Accept a moderate likelihood of running late.

2. Ensure continued Project funding until completion.

Consequence: Due Date December 2020 might overrun by up to 3 months.

Option 2

1. Add Project Phase 2 from January 2021 to March 2021.

2. Move Backlog Items #122 - #143 into Phase 2.

3. Provide Project funding for Phase 2.

Result 1: Due Date December 2020 met.

Result 2: 100% of the planned Scope delivered by March 2021.

Option 3

1. Descope Backlog Items #122 - #143.

Result: 100% of the reduced Scope delivered in December 2020.

Fig. 3: An example risk mitigation proposal for Agile Projects

Most likely, stakeholders will swiftly agree to the second or third proposal and you can resume working as you always did.

Outcome Reporting

The above ideas simply rely on reporting numbers on the "Iron Triangle", and in some cases, executive managers ask for this. In an agile environment, we would prefer to report outcomes in terms of obtained value and business growth.

Even when numbers like the above are required, it's a good idea to spice up the report with quantified results such as "X new users", "Y business transactions" or "Z dollars earned" wherever possible. This will help us drive the culture change we need to succeed.

Closing Remarks

As mentioned in the initial disclaimer, this article is merely an introduction to things you can try pragmatically, and then Inspect+Adapt from there until you have found what works best for you.

The suggested forecasting method, risk management and status reports are primitive on purpose. I will not claim that they result in an accurate forecast, because the biggest risk lies in the Unknowns of our plan: We could be working towards the wrong goal, the known backlog items could be missing the point, or the backlog items could be insufficient to meet the project target.

It's much more important to clarify the Unknowns than to build a better crystal ball around huge unknowns. I believe it's better to keep estimation and forecasts as simple and effortless as possible, and to spend more effort on eliminating the Unknowns.

The best possible agile project delivery strategy relies on the early and frequent delivery of value to eliminate critical Unknowns and maximize the probability of a positive outcome.

Frankly, it doesn't matter how long a project takes or whether the initial objective has been met when every Iteration has a positive Return on Investment - and neither does it matter that a project's initial objectives were met when its overall Return on Investment is negative.