But when you can't, you need a way to deal with the problems introduced by an organization the size of several enterprises collaborating in (near) real time. SAFe has a proposal for getting you started on that one, too.

Defining the value stream

What's a value stream? Simply put, it's everything that happens "from customer (demand) to customer (satisfaction)". In some enterprises, that's obvious, while in others it may be hard to grasp.

An example value stream

Let's take an example: what is the value stream of a smartphone? That depends. If you are dealing with a telco carrier, you as a customer sign a contract, get a SIM card and a device, register it, and start calling. You then get monthly invoices, and that's it, at least from the customer's side.

But what is going on in the background?

To get a contract, you select a package, typically consisting of tariffs, prices, products, options and bundles, which is assigned to your customer account. All of this is handled in so-called "business support systems" (BSS). As a customer, you don't care much how they do that, but because of their complexity, BSS platforms are often provided by specialized organizations. It may be fair to call a BSS platform a "product" in its own right, required not by you, the customer, but by the telco carrier in order to serve its customers. Depending on the carrier, you might find 500+ people working in this line alone.

Next, of course, you want to make a call. But for that, your device must be activated in the telco network, which requires some interaction between the BSS and the network stations. For simplicity's sake, let's just say that the physical network is yet another sub-product required to provide service to you, but ordered by the carrier.

There is also a product line called "Operations Support Systems" (OSS) that takes care of that activation. There are major corporations doing nothing but network base stations, and others doing nothing but OSS. The work here is highly technical and of interest to nobody except operators, but without it you couldn't make a simple phone call.

This means our example value stream actually consists of three product lines, only two of which are exposed to you as a customer. In each of these product lines, some magic happens so that you get to make your call.

So, here's what the value stream would look like:

Figure: A value stream perspective for a mobile network operator

Continuing with our example, let's assume we are dealing with a so-called "Mobile Virtual Network Operator" (MVNO) that does not have its own network. In this case, the network and even the OSS would be purchased services, provided as closed black boxes. Our own development would use the output of these value streams but would not interact with them directly in the process, so our SAFe organization would embed them without touching them directly.

But we still have a problem: there are the BSS teams providing value to end customers by setting up new product lines, and also those providing value to our own business with things like accounting, tax records, audit reporting and so on (plus our black-box technical value streams providing OSS and network services for end-customer value). There are simply too many people to organize in a single Agile Release Train (ART). Now what?

Splitting up the value stream into multiple ARTs

An Agile Release Train can accommodate anywhere from 50 to 150 developers. Beyond that, the Dunbar number (roughly 150 people with whom one can maintain stable relationships) and ordinary organizational complexity get in our way. So we need to keep each ART at a sensible size while still being able to deliver useful products to our customers.

Here are some splitting strategies. Please note that while the terms "Bad", "Better" and "Best" are definitely judgmental, there may still be pressing reasons to follow a specific approach.

A "bad" choice is still better than paralysis.
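As a rough sanity check on the 50-150 range, here is a minimal Python sketch (an illustration, not part of SAFe) that computes how many ARTs a given headcount could be split into, and how an even split would look. The function names are hypothetical; real splits follow the strategies below, not arithmetic.

```python
import math

# Size bounds per ART, as used in this article (50-150 developers).
MIN_ART_SIZE = 50
MAX_ART_SIZE = 150


def feasible_art_counts(headcount: int) -> range:
    """Numbers of ARTs into which `headcount` developers can be split so that
    every ART has between MIN_ART_SIZE and MAX_ART_SIZE developers."""
    if headcount < MIN_ART_SIZE:
        return range(0)  # too few people even for a single ART
    # Fewest trains: enough that none exceeds the upper bound.
    fewest = math.ceil(headcount / MAX_ART_SIZE)
    # Most trains: few enough that none falls below the lower bound.
    most = headcount // MIN_ART_SIZE
    # k trains can hold any total from k*MIN to k*MAX, so every k in between works.
    return range(fewest, most + 1)


def balanced_split(headcount: int, arts: int) -> list[int]:
    """Spread `headcount` developers over `arts` trains as evenly as possible."""
    base, extra = divmod(headcount, arts)
    return [base + 1] * extra + [base] * (arts - extra)


print(list(feasible_art_counts(500)))  # → [4, 5, 6, 7, 8, 9, 10]
print(balanced_split(500, 4))          # → [125, 125, 125, 125]
```

For the 500+ people the BSS line alone might employ, this yields anywhere from 4 to 10 trains. Which count to pick within that range is an organizational decision driven by the splitting strategy, not by the arithmetic.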

Bad: Component split

Probably the most obvious form of splitting is a technical component split, which lets developers focus on a specific subset of technical systems. While that is possible, it's a great way of maximizing dependencies and coordination overhead while minimizing productivity and customer value. We don't want to go there.

Better: Feature category split

In our example, we might consider splitting the value stream around feature categories such as tariffs, campaigns and infrastructure. Feature areas like these are a good starting point for a feature team organization that can deliver end-to-end customer value. Of course, there will still be dependencies, but far fewer than in a component setup.

Best: Customer segment split

Probably the most common form of splitting is by customer segment, such as "private customers", "business customers", "VIP" and "internal customers", with feature teams serving each segment independently. With this approach, strategic management can easily decide to boost or reduce the growth of a customer segment by changing how many people work in that segment. Of course, there is still interaction between the segments, but with a robust product, it should never become a showstopper.

Setting up multiple ARTs

So, after identifying how we want to split up our value stream, keeping in mind that each split should be between 50 and 150 developers in size, we'll end up with multiple independent Agile Release Trains that together form a Value Stream.

Once it is clear which developer is assigned to which ART (for clarity's sake: every developer works on exactly one ART, and every agile team is part of exactly one ART), there are multiple ARTs to launch and coordinate.
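The assignment rule above (every agile team belongs to exactly one ART, and each ART stays within 50-150 developers) can be checked mechanically. The following Python sketch assumes a hypothetical input format: a mapping from ART name to team names, plus a mapping from team name to headcount. It is an illustration, not a SAFe tool.

```python
from collections import Counter

# Size bounds per ART, as used in this article (50-150 developers).
MIN_ART_SIZE = 50
MAX_ART_SIZE = 150


def validate_arts(arts: dict[str, list[str]], team_sizes: dict[str, int]) -> list[str]:
    """Return the rule violations of a proposed ART split (empty list if none).

    `arts` maps a hypothetical ART name to the teams on it; `team_sizes`
    maps each team to its number of developers.
    """
    problems = []

    # Rule 1: every team is part of exactly one ART.
    membership = Counter(team for teams in arts.values() for team in teams)
    for team, count in sorted(membership.items()):
        if count > 1:
            problems.append(f"team {team} is in {count} ARTs")
    for team in sorted(set(team_sizes) - set(membership)):
        problems.append(f"team {team} is not on any ART")
    for team in sorted(set(membership) - set(team_sizes)):
        problems.append(f"team {team} has no known size")

    # Rule 2: every ART stays within the size bounds.
    for art, teams in arts.items():
        size = sum(team_sizes.get(team, 0) for team in teams)
        if not MIN_ART_SIZE <= size <= MAX_ART_SIZE:
            problems.append(f"ART {art} has {size} developers")
    return problems


# Hypothetical customer-segment split: six private-customer teams and
# five business-customer teams of ten developers each.
team_sizes = {f"P{i}": 10 for i in range(6)} | {f"B{i}": 10 for i in range(5)}
arts = {
    "private": [f"P{i}" for i in range(6)],
    "business": [f"B{i}" for i in range(5)],
}
print(validate_arts(arts, team_sizes))  # → []
```

Adding team `P0` to the business ART as well would report `team P0 is in 2 ARTs`, because each team may sit on only one train.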

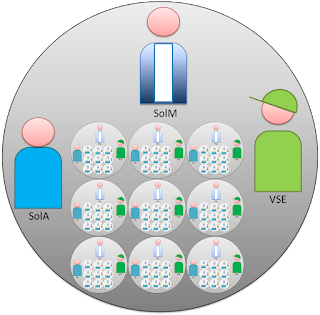

Here is the proposed SAFe structure for setting up multiple ARTs within a single value stream:

Figure: The Value Stream Level, a team of ARTs

This should create a sense of déjà vu, as it looks exactly like the setup of an Agile Release Train, and this similarity is intentional.

In another article, we will describe in more detail how the roles and responsibilities change in comparison to a single ART when this form of split occurs.

Summary

Coordination at Value Stream level becomes an issue when more than 150 developers collaborate on the same product, and even then, the complexity of what you do depends highly on how your organization is set up. At Value Stream level, you may have multiple ARTs sliced in different ways, black boxes of consumed services, and so on.

Going into this level of complexity is only necessary for the largest product groups. SAFe provides a way for them to get started in a structured way even when there are too many people to coordinate within a single ART.

Do not take on the added complexity of Value Stream level coordination unless it is inevitable.